Achieving unmatched efficiency and performance

Compute-in-Memory

Boosting memory capacity and processing speed

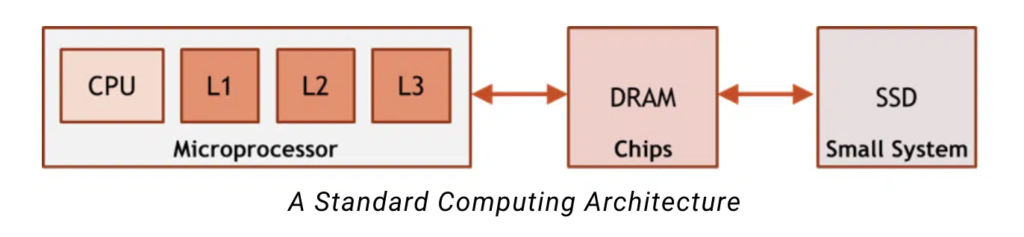

Today’s most common computing architectures are built on two assumptions about how memory is accessed and used: that the full memory space is too large to fit on-chip near the processor, and that we cannot know in advance which data will be needed at what time. To address both the capacity problem and the uncertainty, these architectures build a hierarchy of memory. The levels of the hierarchy closest to the CPU (registers and caches) are small and fast and can sustain frequent access, while DRAM and SSD are large enough to store the bulkier, less time-sensitive data.
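To make the uncertainty problem concrete, here is a minimal sketch of a small, fast memory that guesses which data to keep by retaining whatever was used most recently. The `LRUCache` class, the `hit_rate` helper, and both access traces are hypothetical illustrations, not a model of any specific processor.

```python
from collections import OrderedDict


class LRUCache:
    """Hypothetical small, fast memory that keeps the most recently used addresses."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.lines = OrderedDict()  # address -> placeholder, ordered by recency
        self.hits = 0
        self.misses = 0

    def access(self, address):
        if address in self.lines:
            # Hit: the data is already close to the processor.
            self.hits += 1
            self.lines.move_to_end(address)
        else:
            # Miss: fetch from the larger, slower level and evict the oldest entry if full.
            self.misses += 1
            self.lines[address] = True
            if len(self.lines) > self.capacity:
                self.lines.popitem(last=False)


def hit_rate(trace, capacity):
    """Fraction of accesses in `trace` served by a cache holding `capacity` addresses."""
    cache = LRUCache(capacity)
    for address in trace:
        cache.access(address)
    return cache.hits / len(trace)


# Illustrative traces (assumptions, not measurements).
reuse_trace = [0, 1, 2, 3] * 8   # small working set that is reused often
stream_trace = list(range(32))   # every address is touched exactly once

print(hit_rate(reuse_trace, capacity=4))
print(hit_rate(stream_trace, capacity=4))
```

In this sketch the cache only helps when recent accesses happen to predict future ones: the reused working set is served mostly from the small memory, while the streaming trace never hits.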

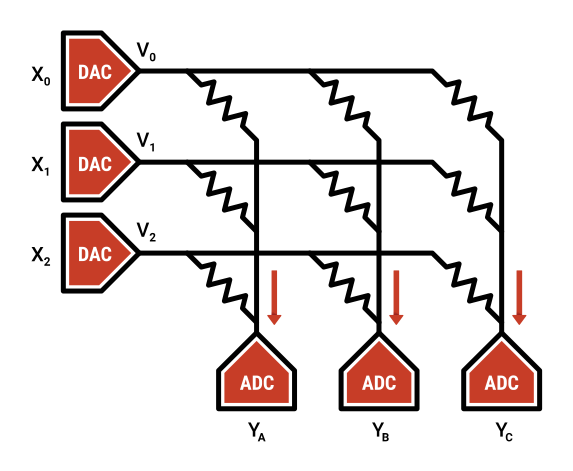

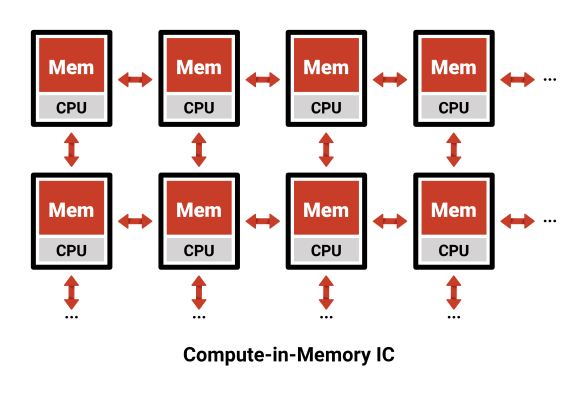

Compute-in-memory is built on different assumptions: we have a large amount of data to access, but we know exactly when we will need it. These assumptions hold for AI inference applications because the execution flow of a neural network is deterministic: unlike in many other applications, it does not depend on the input data. Using that knowledge, we can strategically control where data is placed in memory, instead of building a cache hierarchy to compensate for our lack of knowledge. Compute-in-memory also adds local compute to each memory array, allowing data to be processed directly next to the memory array that stores it. By placing compute next to each memory array, we can build an enormous memory that has the same performance and efficiency as L1 cache (or even register files).
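The two ideas in this paragraph can be sketched in a few lines of Python. This is a simplified software analogy under stated assumptions, not a description of the actual hardware: `build_schedule`, `MemoryArray`, `run_layer`, and the example weights are all hypothetical names and values introduced for illustration. The schedule is computed from layer shapes alone, before any input exists, and each memory array computes on the weight tile it stores.

```python
def build_schedule(layer_shapes, tile_rows):
    """Assign every weight tile to a (hypothetical) memory array.

    Only the layer shapes are needed, so the placement is fixed
    before any input data is seen.
    """
    schedule = []
    array_id = 0
    for layer, (rows, _cols) in enumerate(layer_shapes):
        for start in range(0, rows, tile_rows):
            stop = min(start + tile_rows, rows)
            schedule.append({"layer": layer, "array": array_id, "rows": (start, stop)})
            array_id += 1
    return schedule


class MemoryArray:
    """Holds one tile of weights and computes dot products next to them."""

    def __init__(self, weight_rows):
        self.weight_rows = weight_rows  # the weights never leave this array

    def compute(self, activations):
        # Local multiply-accumulate over the stored weight rows.
        return [sum(w * a for w, a in zip(row, activations)) for row in self.weight_rows]


def run_layer(weights, activations, tile_rows):
    """Split a weight matrix across arrays and concatenate their local results."""
    arrays = [
        MemoryArray(weights[i:i + tile_rows])
        for i in range(0, len(weights), tile_rows)
    ]
    output = []
    for array in arrays:
        output.extend(array.compute(activations))
    return output


# Illustrative values (assumptions, not real model weights).
weights = [[1, 0, 2], [0, 1, 0], [3, 1, 1], [2, 2, 2]]
activations = [1, 2, 3]

schedule = build_schedule([(4, 3)], tile_rows=2)
tiled = run_layer(weights, activations, tile_rows=2)
reference = [sum(w * a for w, a in zip(row, activations)) for row in weights]

print(schedule)
print(tiled == reference)
```

Only the activations and the per-array results move between arrays in this sketch; the weights stay where the schedule placed them, which is the property the paragraph above relies on.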

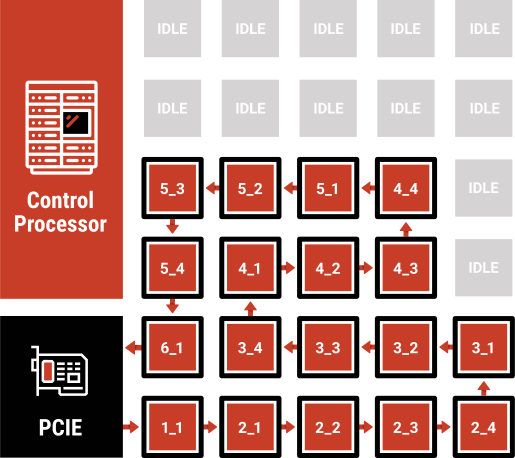

Dataflow Architecture

Maximizing inference performance through careful architecture design