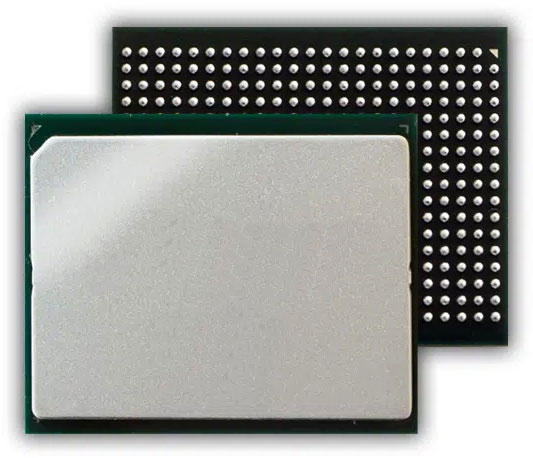

V1076 Analog Matrix Processor

Overview

The V1076 Volga AMP™ delivers up to 25 TOPS in a single chip for high-end edge AI applications. The V1076 integrates 76 AMP tiles that store up to 80M weight parameters and execute matrix multiplication operations without any external memory. This allows the V1076 to deliver the AI compute performance of a desktop GPU while consuming as little as one-tenth of the power, all in a single chip.
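As a rough illustration, assume the 80M-weight capacity is divided evenly among the tiles. The brief does not state how weights are distributed, so this is only an assumption. Under it, each AMP tile holds on the order of one million weights:

```latex
\frac{80 \times 10^{6}\ \text{weights}}{76\ \text{tiles}} \approx 1.05 \times 10^{6}\ \text{weights per tile}
```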

Features

- Array of 76 AMP tiles, each with a Volga Analog Compute Engine (Volga ACE™)

- On-chip DNN model execution and weight parameter storage with no external DRAM

- Execution of models at higher resolution and lower latency for better results

- 4-lane PCIe 2.1 interface with up to 2GB/s of bandwidth for inference processing

- 19mm x 15.5mm BGA package

- Capacity for up to 80M weights – able to run single or multiple complex DNNs entirely on-chip

- Deterministic execution of AI models for predictable performance and power

- Support for INT4, INT8, and INT16 operations

- Available I/Os – 10 GPIOs, QSPI, I2C, and UARTs

- Typical power consumption of 3 to 4 W when running complex models
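A little arithmetic can sanity-check the interface and capacity figures listed above. The sketch makes three assumptions: PCIe 2.x runs at the standard 5 GT/s per lane with 8b/10b encoding, protocol overhead is ignored, and the 80M-weight capacity is a plain weight count that does not vary with INT4/INT8/INT16 precision. The brief does not say how precision affects capacity. The helper names and the model sizes in the usage example are hypothetical.

```python
# Rough PCIe 2.1 x4 throughput and on-chip capacity checks.
# Assumptions: 5 GT/s per lane, 8b/10b encoding, no PCIe protocol overhead,
# and an 80M weight capacity that is independent of numeric precision.

GT_PER_SEC_PER_LANE = 5_000_000_000  # PCIe 2.x raw signaling rate per lane, per direction
LANES = 4
WEIGHT_CAPACITY = 80_000_000  # on-chip weight capacity stated in the brief


def pcie2_bandwidth_bytes_per_sec(lanes: int = LANES) -> int:
    """Usable bytes/s per direction before protocol overhead."""
    # 8b/10b: every 10 bits on the wire carry 8 bits of payload.
    payload_bits_per_sec = GT_PER_SEC_PER_LANE * lanes * 8 // 10
    return payload_bits_per_sec // 8


def transfer_time_ms(num_bytes: int, lanes: int = LANES) -> float:
    """Lower bound on the time to move num_bytes across the link, in milliseconds."""
    return num_bytes / pcie2_bandwidth_bytes_per_sec(lanes) * 1e3


def fits_on_chip(param_counts: list[int]) -> bool:
    """True if the combined weight count of all models fits within the capacity."""
    return sum(param_counts) <= WEIGHT_CAPACITY


if __name__ == "__main__":
    bw = pcie2_bandwidth_bytes_per_sec()
    print(f"x4 link: {bw / 1e9:.1f} GB/s")  # 2.0 GB/s
    frame = 1920 * 1080 * 3  # one uncompressed 8-bit RGB 1080p frame
    print(f"1080p RGB frame: {transfer_time_ms(frame):.2f} ms")
    # Two hypothetical models of 25.6M and 40M weights
    print(fits_on_chip([25_600_000, 40_000_000]))
```

Real throughput will be somewhat lower once PCIe packet overhead is counted, so treat these numbers as upper bounds.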

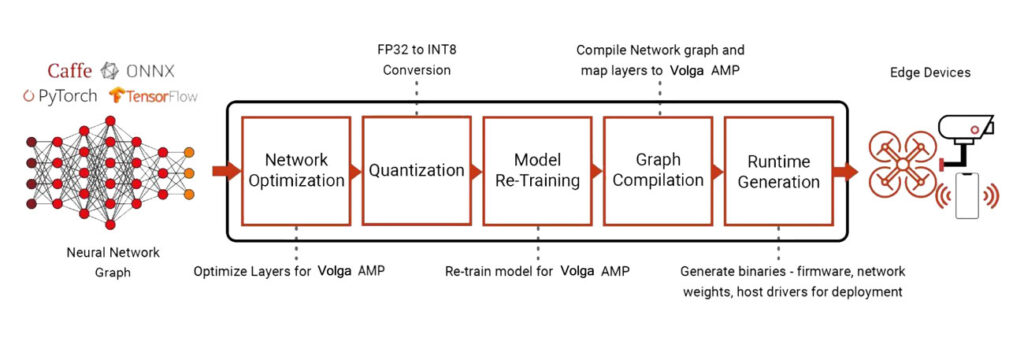

Workflow

DNN models developed in standard frameworks such as PyTorch, Caffe, and TensorFlow are implemented and deployed on the Volga Analog Matrix Processor (Volga AMP™) using Volga's AI software workflow. Models are optimized, quantized from FP32 to INT8, and then retrained for the Volga Analog Compute Engine (Volga ACE™) before being processed by Volga's powerful graph compiler. The resulting binaries and model weights are then programmed into the Volga AMP for inference. Pre-qualified models are also available so that developers can quickly evaluate the Volga AMP solution.
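The following minimal sketch makes the FP32-to-INT8 step concrete using generic symmetric, per-tensor quantization. It is not Volga's quantizer, which this brief does not describe, and it omits the retraining step. The function names are hypothetical.

```python
# Generic symmetric, per-tensor FP32 -> INT8 quantization (illustrative only;
# not the Volga toolchain). One shared scale maps the largest magnitude to 127.


def quantize_int8(values: list[float]) -> tuple[list[int], float]:
    """Map floats to signed 8-bit integers in [-127, 127] with one shared scale."""
    max_abs = max((abs(v) for v in values), default=0.0)
    # Fall back to a scale of 1.0 for an all-zero tensor to avoid dividing by zero.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # round() returns an int; clamp in case of tiny floating-point overshoot.
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize_int8(q: list[int], scale: float) -> list[float]:
    """Recover approximate float values from the INT8 codes."""
    return [x * scale for x in q]


if __name__ == "__main__":
    weights = [0.42, -1.3, 0.07, 0.95, -0.01]
    q, scale = quantize_int8(weights)
    restored = dequantize_int8(q, scale)
    print(q, scale)
    # Largest per-element reconstruction error introduced by rounding
    print(max(abs(a - b) for a, b in zip(weights, restored)))
```

Per-element reconstruction error is bounded by half a quantization step (scale / 2). Retraining after quantization, as the workflow above describes, is a common way to recover accuracy lost to this rounding.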

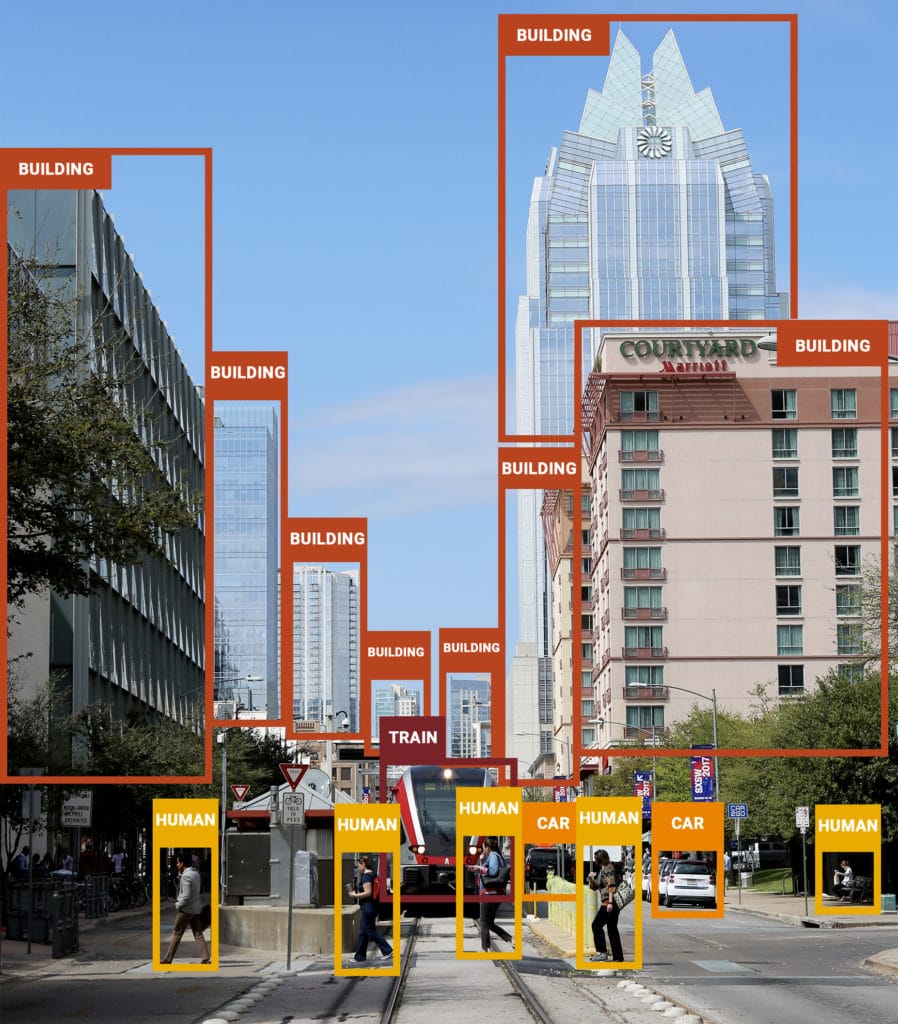

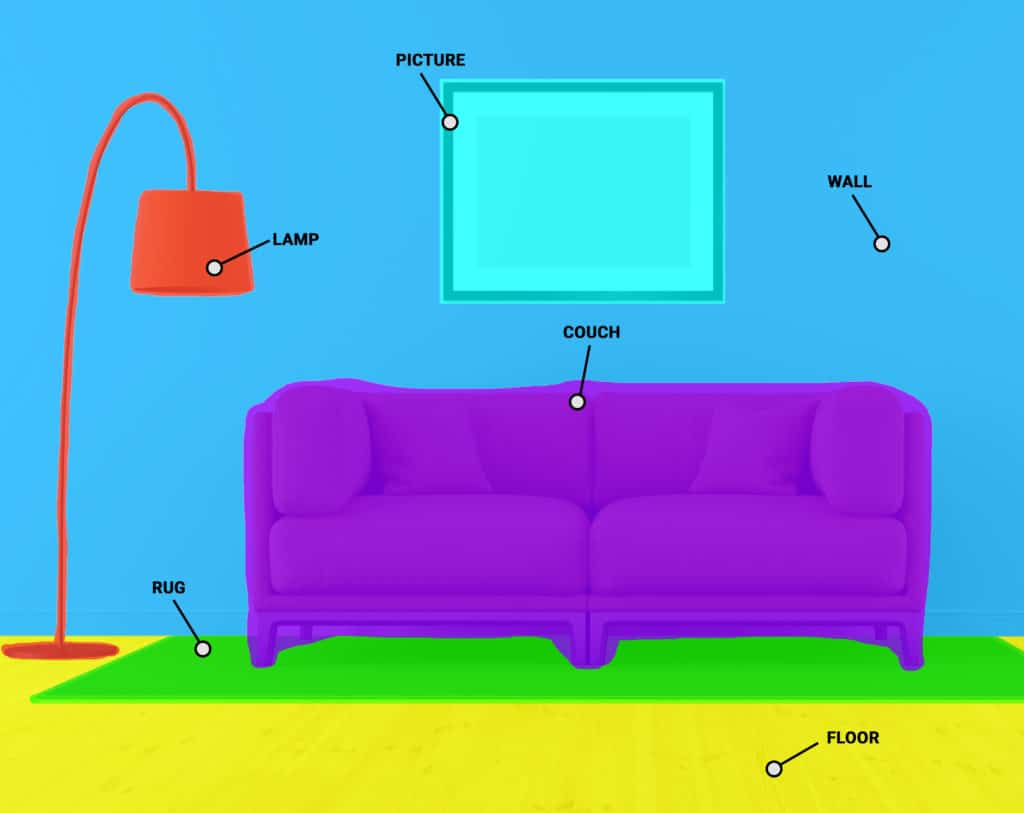

DNN Model Library

Volga provides a library of pre-qualified DNN models for the most popular AI use cases. The DNN models have been optimized to take advantage of the high-performance and low-power capabilities of the Volga Analog Matrix Processor (Volga AMP™). Developers can focus on model performance and end-application integration instead of the time-consuming model development and training process. Available pre-qualified DNN models include: